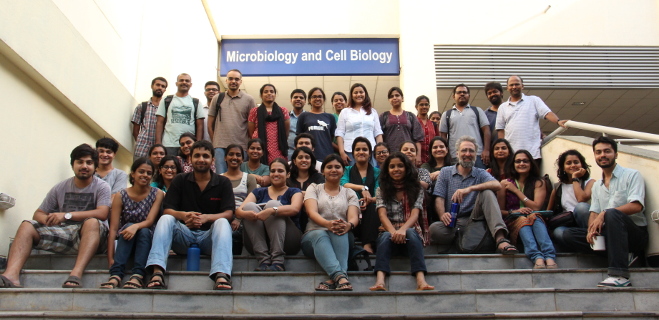

On the Appropriate Use of Statistics in Ecology: an interview with Ben Bolker

Conducted on 30th June 2015 at CES, IISc.

Mon, 2015-08-03 16:17

Ben Bolker talks about learning statistics, common statistical mistakes that people make, statistical machismo, statistical software and R, statistical philosophies, and his favourite papers and textbooks.

Hari Sridhar: I don’t think it would be wrong to say that most biologists dislike mathematics. At least in India, I know many people who chose biology in college to run away from mathematics. Ironically, if one later wants to become a biologist, especially an ecologist, there is no getting away from mathematics and statistics. What would you say to such people – how can they develop a liking for numbers?

Ben Bolker: I think a good starting point is data visualisation. Looking at your data visually is fun and intuitive and a much better place to begin than by turning some abstract crank till the numbers pop out. Learning tools for visualising your data leads you into asking better questions about your data, because you are looking at the picture and you are getting practice in translating a scientific question into a question about the numbers, not just “how do I get a p-value out of these numbers as quickly as possible”. Having said that, I don’t think everyone is going to love statistics, I don’t think everyone should love statistics, I don’t think we should make everyone love statistics. As a biologist, you can avoid statistics if you are willing to stay in the lab and work with things that happen in large numbers, and under very controlled situations, and you don’t have to deal with confounding variables and spatial correlations and all that stuff. But if you want to try to tease out something delicate then you can’t avoid statistics. But even in that situation, you can reduce the need for complicated analysis by thinking carefully about your study design and coming up with a great experiment (or observational study).

H: For many of us, our first exposure to statistics was through doing statistical tests straightaway, without much of a conceptual understanding. Is that a good way to start or is it important to get a grounding in the fundamentals – probability theory, for example – first?

B: I don’t think a grounding in probability theory is really necessary. Yes, it is a mistake to run the test and just look at the stars (p-values). You should first visualise the data, then run the test, then look at the numbers that come before the stars, interpret these numbers in the context of the visualisation and of the scientific question, and only then look at the stars. So I think that running tests with complete tunnel vision is a mistake. But it should be possible to use statistics well without taking a course in probability or reading a book about probability. Crunching the numbers and playing around with data is not a bad place to start: either your own data, or other people’s data that are close enough to your own to inspire you or give you ideas. Introductory statistics books are often boring because their examples are completely unrepresentative of what we have to do. Part of the reason is that they start with simple scenarios, and in some sense that is okay, because you don’t want to jump into the deep end right away. But it would be nice to see more statistics books where all the examples were ecological.
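To make that order concrete, here is a minimal Python sketch (standard library only) that reports the effect estimate and its interval before the p-value. The data are hypothetical, the helper name `describe_difference` is made up for illustration, and it uses a large-sample normal approximation rather than a t-test, so with samples this small a t-based interval would be somewhat wider. The plotting step, which should come first, is left out.

```python
from math import sqrt
from statistics import NormalDist, mean, stdev

def describe_difference(control, treatment, level=0.95):
    """Return the effect estimate, its confidence interval, and the p-value.

    Large-sample normal (Welch-type) approximation; a t-based interval is
    more appropriate for small samples.
    """
    diff = mean(treatment) - mean(control)
    se = sqrt(stdev(control) ** 2 / len(control) + stdev(treatment) ** 2 / len(treatment))
    z_crit = NormalDist().inv_cdf(0.5 + level / 2)
    ci = (diff - z_crit * se, diff + z_crit * se)
    p = 2 * (1 - NormalDist().cdf(abs(diff / se)))
    return {"estimate": diff, "ci": ci, "p_value": p}

# Hypothetical measurements, made up purely for illustration.
control = [4.1, 5.0, 4.7, 5.3, 4.4, 4.9, 5.1, 4.6]
treatment = [5.2, 5.8, 5.1, 6.0, 5.5, 5.4, 6.1, 5.0]

result = describe_difference(control, treatment)
# First the numbers "before the stars": the estimate and its interval ...
print(f"difference = {result['estimate']:.2f}, "
      f"95% CI = ({result['ci'][0]:.2f}, {result['ci'][1]:.2f})")
# ... and only then the p-value.
print(f"p = {result['p_value']:.3g}")
```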

H: Why do we need statistics in ecology?

B: Because the world is noisy. Because the world is noisy and because scientists are conservative. If I do my experiment and all 20 control animals die and all 20 treatment animals survive, I don’t need statistics to tell me the treatment had an effect. But if 14 of the control animals die and 10 of the treatment animals die, well, I don’t know. Did the treatment have an effect? At some point you are going to have to draw a line, and it has to be a line that the community will accept. You can’t just say “Well, it looks to me like my treatment was successful”. Statistics is the community standard.
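The two scenarios can be checked with Fisher’s exact test on a 2×2 table of deaths and survivals. This is a minimal standard-library sketch; `fisher_exact_two_sided` is a hypothetical helper written for illustration (SciPy’s `scipy.stats.fisher_exact` provides a tested implementation), and its two-sided p-value sums the probabilities of all tables with the same margins that are no more probable than the observed one.

```python
from math import comb

def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher's exact test for the 2x2 table [[a, b], [c, d]].

    Rows are groups (control, treatment); columns are outcomes (died, survived).
    """
    row1, row2 = a + b, c + d
    col1 = a + c  # total number of deaths
    n = row1 + row2

    def prob(x):
        # Hypergeometric probability that the top-left cell equals x,
        # holding all row and column totals fixed.
        return comb(row1, x) * comb(row2, col1 - x) / comb(n, col1)

    p_observed = prob(a)
    lowest, highest = max(0, col1 - row2), min(row1, col1)
    # Sum every table that is no more probable than the observed one
    # (the small tolerance guards against floating-point ties).
    return sum(prob(x) for x in range(lowest, highest + 1)
               if prob(x) <= p_observed * (1 + 1e-7))

# All 20 controls die, all 20 treated animals survive.
p_clear = fisher_exact_two_sided(20, 0, 0, 20)
# 14 of 20 controls die versus 10 of 20 treated animals.
p_murky = fisher_exact_two_sided(14, 6, 10, 10)
print(p_clear, p_murky)
```

The all-or-nothing result gives a vanishingly small p-value, while 14 versus 10 deaths out of 20 is not distinguishable from noise at the 0.05 level: exactly the grey zone where a community standard is needed.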

H: What are the most common statistical mistakes that ecologists make?

B: There is a mistake that’s getting talked about a lot now: 'data-snooping' or 'fishing' or 'p-hacking'. All these are different names for the same problem – essentially, doing lots of statistical tests and pretending you did only one. The intentions behind it vary enormously - some people are doing it dishonestly and many people do it without realising that it is a problem. I think it is very deeply and closely related to the way we are taught to do statistics. For example, a very popular way of thinking about statistics is in the form of a minimal adequate model - assemble all the variables that you think might predict the response of interest, remove all the ones that don’t seem to be doing anything, and analyse the ones that are left. That’s a really bad idea. The problem is that all of the theory that p-values are built on assumes that you have specified the particular model or hypothesis you are testing in advance. It does not account for a situation in which you start with 200 predictors, throw out 195 and keep five. The p-value doesn’t mean anything here.
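A short simulation shows why. In this Python sketch the assumptions are 50 observations and 200 predictors that are pure noise by construction, with each predictor screened one at a time by its correlation with the response (a simplified stand-in for stepwise model reduction). The survivors of the screen tend to have small naive p-values even though no predictor is real. The p-values use Fisher’s z-transform as a normal approximation.

```python
import random
from math import atanh, sqrt
from statistics import NormalDist

def correlation(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)

def naive_p(r, n):
    # Under no correlation, atanh(r) * sqrt(n - 3) is roughly standard normal.
    z = atanh(r) * sqrt(n - 3)
    return 2 * (1 - NormalDist().cdf(abs(z)))

rng = random.Random(1)
n_obs, n_predictors, n_keep = 50, 200, 5

# Pure noise: the response is unrelated to every predictor by construction.
response = [rng.gauss(0, 1) for _ in range(n_obs)]
predictors = [[rng.gauss(0, 1) for _ in range(n_obs)] for _ in range(n_predictors)]

p_values = sorted(naive_p(correlation(x, response), n_obs) for x in predictors)
kept = p_values[:n_keep]  # "throw out 195, keep five"

print("naive p-values of the kept predictors:", [round(p, 4) for p in kept])
print("share of all predictors with p < 0.05:",
      sum(p < 0.05 for p in p_values) / n_predictors)
```

Reporting the kept p-values as if those five predictors had been chosen in advance is exactly the problem: the selection step has already used the data.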

H: This is such a common way of doing analysis!

B: It is a very common way of doing analysis. I am torn about variations on this theme, like testing interactions, throwing out interactions that are not significant and keeping all the main effects. That makes me nervous, but I haven’t sat down and thought about how that biases the interpretation of the main effects. It is definitely not as bad as taking 100 main effects and throwing away 95 of them. But it might still be bad.

Pseudoreplication, which is the idea that you are measuring the same thing many times within a treatment and pretending that they are all independent replicates, is less common today because ecology went through a phase when it was extremely concerned with that particular problem. There was a little bit of a pendulum swing when observational ecologists studying questions at large spatial scales pointed out there are interesting ecological questions where replication just isn’t possible. There is a paper by Oksanen that follows up on the Hurlbert (1984) paper [the most well-known paper describing pseudoreplication for ecologists] that makes this point. I would say that if you are not able to replicate - say, if you are only able to measure something in two different watersheds that vary in one way - you at least need to be upfront about the problem. And then you will find a wide spectrum of opinions about it: 'You simply can’t do that'; 'You can do that but you shouldn’t put any p-values in the paper'; 'It’s OK to do that and put p-values in the paper as long as you explain clearly that they might not mean anything!'
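One way to see the cost of pseudoreplication is the design effect from survey sampling. With m subsamples per replicate and an intraclass correlation ρ among subsamples, n correlated measurements carry roughly the information of n / (1 + (m − 1)ρ) independent ones. The Python sketch below assumes equal-sized clusters and a single exchangeable correlation. The tank-and-fish design is hypothetical.

```python
def design_effect(subsamples_per_unit, icc):
    """Design effect for equal-sized clusters: 1 + (m - 1) * ICC."""
    return 1 + (subsamples_per_unit - 1) * icc

def effective_n(n_measurements, subsamples_per_unit, icc):
    """Number of independent observations the correlated measurements are worth."""
    return n_measurements / design_effect(subsamples_per_unit, icc)

# Hypothetical design: 2 tanks per treatment, 10 fish measured in each tank.
for icc in (0.0, 0.1, 0.3, 1.0):
    print(f"ICC = {icc}: 20 fish per treatment behave like "
          f"{effective_n(20, 10, icc):.1f} independent fish")
```

When ρ = 1 the 20 fish are worth only the 2 tanks, which is the number of true replicates. Treating them as 20 independent replicates overstates the evidence.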

H: What about interpretation - what are the common mistakes in statistical interpretation that people make?

B: I think the overwhelming mistake is to conclude that two groups differ in an effect because the effect is statistically significant in one group and not in the other. For example, you measure males and females and find the effect of weight on fitness to be statistically significant in males but not in females. From this you say that males and females differ in the effect of weight on their fitness. I see this all the time. It is a slightly more sophisticated version of the general rule ‘if the p value < 0.05 it’s real and if the p value is not < 0.05 it’s not real’. My colleague and I have been trying to decide what to call that. We have not come up with anything better than 'the prime fallacy' so far, so we might try to promote that name.

H: Tell us a little more - why is this a problem?

B: Let’s say the slope of the relationship between weight and fitness in males is 1.5 and the p value is 0.049, and in females the slope is 1.45 and the p value is 0.051. The slopes are very similar to each other, but they happen to lie on opposite sides of our arbitrary threshold. What you should do is test the interaction between weight and sex, i.e. test the difference in the slopes, which is the statement you want to make. It’s true that the effect is significant in males and not significant in females, but if you are going to compare males and females, the correct comparison is to test the interaction and show a picture (this comes back to visualisation) so that everybody can see that, although there is a star on one line and not on the other, the two slopes are practically the same.
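The arithmetic can be sketched in Python using only the numbers in this example. Assumptions: large-sample normal (Wald) tests, and male and female slopes estimated from independent data, so the standard error of their difference is the square root of the sum of the squared standard errors. In practice you would fit the weight × sex interaction in a single model. The helper names are illustrative.

```python
from math import sqrt
from statistics import NormalDist

std_normal = NormalDist()

def se_from_estimate_and_p(estimate, p_two_sided):
    # Standard error implied by an estimate and its two-sided Wald p-value.
    z = std_normal.inv_cdf(1 - p_two_sided / 2)
    return abs(estimate) / z

def wald_p(estimate, se):
    # Two-sided p-value for estimate / se under a standard normal reference.
    return 2 * (1 - std_normal.cdf(abs(estimate / se)))

slope_m, slope_f = 1.5, 1.45
se_m = se_from_estimate_and_p(slope_m, 0.049)
se_f = se_from_estimate_and_p(slope_f, 0.051)

# Test the difference in slopes directly (the interaction),
# treating the male and female estimates as independent.
diff = slope_m - slope_f
se_diff = sqrt(se_m ** 2 + se_f ** 2)
p_diff = wald_p(diff, se_diff)

print(f"males:   p = {wald_p(slope_m, se_m):.3f}")
print(f"females: p = {wald_p(slope_f, se_f):.3f}")
print(f"difference in slopes = {diff:.2f}, p = {p_diff:.2f}")
```

The difference in slopes has a p value close to 1, even though one slope "has a star" and the other does not.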

H: Let’s talk a little more about ‘p < 0.05’. Where did this arbitrary threshold come from? Should we do away with it altogether?

B: There is a nice webpage where somebody traces the history of the 0.05 cutoff. Regarding whether we should do away with it: I am not sure. A test against a null hypothesis is useful because it is a first filter to say whether the thing you are looking at is actually distinguishable from random noise. If you tell me only the effect size or the slope of the line and you don’t tell me the confidence intervals or the p-value, then that’s really not useful. If you tell me the p-value but not the slope of the line, that is not really useful either. So I really need both pieces of information to interpret the data correctly. Of course, there is the problem of journals placing too much emphasis on significant results, which in turn leads to the temptation to manipulate data to make results significant. But if we do away with the 0.05 threshold, people will come up with some other equally arbitrary rule to follow in a mechanical way. People don’t want to think and just find it simpler to have a yes-or-no answer. I completely understand people not wanting to do statistics, but it bothers me when they don’t even want to think about their results.
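A minimal Python sketch of why the p-value alone is not enough: two hypothetical results with the same z-statistic, and therefore the same p-value, can describe effects that differ 200-fold in size. The estimates and standard errors are invented for illustration, and the intervals use a normal approximation.

```python
from statistics import NormalDist

def summarize(estimate, se, level=0.95):
    """Estimate, normal-approximation confidence interval, and two-sided p-value."""
    z_crit = NormalDist().inv_cdf(0.5 + level / 2)
    p = 2 * (1 - NormalDist().cdf(abs(estimate / se)))
    return {"estimate": estimate,
            "ci": (estimate - z_crit * se, estimate + z_crit * se),
            "p": p}

# Two hypothetical results with identical p-values but very different effect sizes.
tiny_effect = summarize(estimate=0.01, se=0.004)  # e.g. huge sample, negligible effect
large_effect = summarize(estimate=2.0, se=0.8)    # e.g. small sample, large effect

for name, res in [("tiny", tiny_effect), ("large", large_effect)]:
    lo, hi = res["ci"]
    print(f"{name}: estimate = {res['estimate']}, "
          f"95% CI = ({lo:.3f}, {hi:.3f}), p = {res['p']:.4f}")
```

Only the estimate and its interval tell you whether the effect is large enough to matter biologically; only the test tells you whether it is distinguishable from noise.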

H: Going back to the problem of data-snooping or p-hacking: how do we rectify the situation? Should journals insist that authors provide a full documentation of their analysis, including those that didn’t find place in the final version of the paper? Should scientists decide, before they conduct the research, what analyses they are going to carry out?

B: Journals are getting more particular and demanding. Many journals require you to deposit data and document any non-trivial exploration of your data. There is a lot of discussion of pre-registration: before doing your study, signing up and actually stating in print what analysis you are going to carry out with your data. But I think it will be very burdensome to pre-register everything you are ever going to do and to have somebody in your lab watching what you do to make sure you are not cheating. I am a trusting person and I would like things to be less formal. But I certainly would want to know, when I read a paper, which statistical tests were confirmatory and which were exploratory. I think that a good goal is to think of analysis documentation more or less in the same way that lab notebooks work. People do have a sense that lab notebooks are inviolate and that everything done in the lab needs to be written in the lab notebook. I certainly think that a similar system is required for data analysis.

H: Planning your analysis before data collection will also help in knowing how much data is required?

B: Yes, a power analysis is always very useful. In North America (and presumably in the rest of the world too), if you are doing experiments with vertebrates, you are required to submit a power analysis before you start the experiment, to get approval from the ethics committee to kill or frighten or do whatever you are doing with the animals. Ethics boards are just as unhappy about overpowered tests as underpowered tests: if you do an underpowered test, the lives of all the animals you used are wasted, and if you do an overpowered test, you are killing more animals than you need to.

If you think you are going to need complicated statistics, doing lots of preliminary analysis with simulated data is important. If you are doing simple statistics, then deciding what you are going to do should, in principle, be easy, and so should calculating the power. For example, for a t-test or a one-way ANOVA, a power analysis won’t take you more than an hour. I think it is entirely worthwhile to spend that hour, especially if you are going to be spending months or years in the field collecting data. But I must say that even I do it less often than I should.
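For a simple two-group comparison, that hour of work might look something like this Python sketch. `n_per_group` uses the standard normal-approximation formula n = 2(z_(1−α/2) + z_power)² / d² for a standardised effect size d. `simulated_power` checks it the way one would for a more complicated design: simulate the planned experiment many times and count how often the test rejects. The effect size of 0.5, α = 0.05 and 80% power are assumptions for illustration, and an exact t-based calculation gives a slightly larger n.

```python
import random
from math import ceil, sqrt
from statistics import NormalDist, mean, stdev

z = NormalDist().inv_cdf  # standard normal quantile function

def n_per_group(effect_size, alpha=0.05, power=0.8):
    """Normal-approximation sample size per group for comparing two means;
    effect_size is the difference in means divided by the common SD."""
    return ceil(2 * (z(1 - alpha / 2) + z(power)) ** 2 / effect_size ** 2)

def simulated_power(n, effect_size, alpha=0.05, n_sims=2000, seed=42):
    """Estimate power by simulating the planned experiment n_sims times
    and counting how often a two-sided z-test rejects."""
    rng = random.Random(seed)
    crit = z(1 - alpha / 2)
    rejections = 0
    for _ in range(n_sims):
        a = [rng.gauss(0.0, 1.0) for _ in range(n)]
        b = [rng.gauss(effect_size, 1.0) for _ in range(n)]
        se = sqrt(stdev(a) ** 2 / n + stdev(b) ** 2 / n)
        if abs(mean(b) - mean(a)) / se > crit:
            rejections += 1
    return rejections / n_sims

n = n_per_group(0.5)
power = simulated_power(n, 0.5)
print("animals per group:", n)
print("simulated power:", power)
```

For designs with blocks, random effects or non-normal responses there is usually no closed-form formula, and the simulation route is the one that generalises.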

H: You make a distinction between confirmatory and exploratory statistical tests. Can you explain this a little more?

B: Confirmation means that you have specified the hypothesis in advance: you have one model, you run a test, you get the p-value, and that’s it. A test is truly confirmatory only if you made all the decisions before you looked at the data. Exploration, of course, is self-explanatory: you play around with the data in many ways, without specifying a particular hypothesis in advance. I think exploration is very important. It is insane to collect all that data, do only one thing with it and throw it away. There is a third category too: prediction. When you do prediction, there are methods for adjusting parameters in a principled way, similar to AIC-based multi-model averaging, that will give you better predictions, perhaps at the cost of no longer having a known and reliable p-value.
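AIC-based multi-model averaging usually works through Akaike weights, w_i = exp(−Δ_i/2) / Σ_j exp(−Δ_j/2), where Δ_i is each model’s AIC minus the smallest AIC in the set. The Python sketch below computes the weights and a weighted-average prediction. The AIC values and predictions are hypothetical, and this is only the simplest form of model averaging.

```python
from math import exp

def akaike_weights(aic_values):
    """Akaike weights: exp(-delta_i / 2) normalised to sum to one."""
    best = min(aic_values)
    relative_likelihoods = [exp(-(a - best) / 2) for a in aic_values]
    total = sum(relative_likelihoods)
    return [r / total for r in relative_likelihoods]

def model_averaged_prediction(predictions, aic_values):
    """Weight each model's prediction by its Akaike weight."""
    weights = akaike_weights(aic_values)
    return sum(w * p for w, p in zip(weights, predictions))

# Hypothetical AIC values and predictions from three candidate models.
aics = [100.0, 101.5, 106.0]
preds = [12.0, 14.0, 9.0]
print([round(w, 3) for w in akaike_weights(aics)])
print(round(model_averaged_prediction(preds, aics), 2))
```

The averaged prediction borrows from every plausible model instead of betting on one, which is why the usual single-model p-value no longer applies.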

H: What is your response to Brian McGill’s views on ‘statistical machismo’ – that people use more complicated statistics than required, journals force authors to use complicated statistics, and there is a case to be made for greater thought put into study design to reduce the need for complicated statistics.

B: I agree with all three! My only quibble with McGill’s post is that it is relevant mainly in the context of strong ecological effects, where issues like phylogenetic correlation and detectability might indeed not matter much to the qualitative answer. But I know a lot of people in ecology who study weaker effects!

The other thing is that one person’s statistical machismo might be another person’s adequate statistics. I think editors and journals being dogmatic about the issue is a problem. But if, for example, you come to me with a blocked design and say you don’t feel like dealing with the experimental blocks, I am going to say that’s not okay. We should have a conversation: you might convince me that you can pretend the data are independent and it will be all right, and I might be willing to consider approximate or simpler statistics. But I am worried about a back-swing along the lines of: ‘Well, I don’t have to do that because Brian McGill said that simple statistics were okay’.

H: What is your view on the use of statistical software that provides tests at the click of a button, where the user has no idea of what’s happening in the background?

B: The key is what you mean by ‘no idea of what’s happening in the background’. If you understand what’s going in and what’s coming out, if you understand the assumptions being made and the numbers that the software spews out, then I think you may well be okay. It’s when you want to go straight to the stars and not think about what the numbers mean (we are all doing this at some level) that it becomes a problem. There is linear algebra going on in linear mixed-effects models that is very difficult to understand, that very few people understand. Is that okay? I think it is probably okay. I think there are two problems with button-pushing. First, it makes it easier to just look at the stars and not think about what you are doing. I also think the correlation is driven from the other direction: people who don’t want to think about what they are doing would rather push buttons. So the fact that most people who push buttons don’t know what they are doing doesn’t mean that pushing buttons is bad; it may just mean that pushing buttons is something a certain kind of person is drawn to. The second problem with pushing buttons is that you are constrained by what the software can do. Instead of thinking about what’s the best statistical approach for your specific question, you look at the menu of tests and pick the one that fits best, even if the fit is poor. I certainly think that’s harmful. But the breadth of statistical packages is always going up. It is now perfectly easy to fit a generalized linear model by pushing buttons, which wasn’t true some time ago. It is getting easier to fit mixed models by pushing buttons. So that constraint is not as strong as it used to be.

H: A somewhat related question – how much of the maths that lie behind these tests should one know?

B: Here again, I am handicapped by the fact that I know a lot of the math. It is true that the more math you know, the more deeply you will understand what’s happening, but it is enough to have a good conceptual grasp of what’s going on, even if you don’t understand the details. You need to know enough math to look at the numbers coming out of the machine and know what they mean. For example, if the log odds of predation have increased by one unit, you need to know what that means and not just say that’s a big number with a bunch of stars after it.
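To make the log-odds example concrete: a one-unit increase in log odds multiplies the odds by e ≈ 2.72, but the resulting change in probability depends on where you start. A minimal Python sketch, with hypothetical baseline predation probabilities:

```python
from math import exp, log

def logistic(x):
    """Convert log odds to a probability."""
    return 1 / (1 + exp(-x))

def logit(p):
    """Convert a probability to log odds."""
    return log(p / (1 - p))

odds_ratio = exp(1.0)  # +1 on the log-odds scale multiplies the odds by e
print(f"odds ratio for +1 log-odds unit: {odds_ratio:.2f}")

# Hypothetical baseline predation probabilities.
for baseline in (0.01, 0.2, 0.5, 0.9):
    new = logistic(logit(baseline) + 1.0)
    print(f"baseline predation probability {baseline:.2f} -> {new:.3f}")
```

The odds ratio is the same at every baseline, but a rare event stays fairly rare (0.01 becomes roughly 0.027), while a 50:50 event rises to about 0.73.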

H: What is your take on the ongoing debate about the best statistical approach – frequentist, Bayesian or permutation-based?

B: I think you can do perfectly good science with any of those philosophies if you use them well. Some things are easier and some things are harder depending on what framework you adopt. And as the technology gets better everything gets easier. But I really don’t see any particular need for people to adopt one philosophy or the other as long as they use the one they are using correctly. I can state advantages and if people look at those advantages and say ‘Oh I would like to do that’, that’s great, but if somebody is confident using one kind of statistics and it is solving their problems, I don’t see any reason they should move.

H: What influence has R had on the practice of statistics?

B: R has been around for about 20 years, and S-PLUS existed before that. This is a bit of a statistician’s answer: it’s hard to say, because I don’t know what the world would have looked like without R over these 20 years. It’s true that with R the level of sophistication of the statistics people use has increased (it allows people to tailor analyses to their specific requirements), but I’m not sure that the level of fundamental understanding has changed. People are still taking shortcuts and trying not to think about their analysis when they don’t have to.

H: How does one begin to learn R?

B: Data visualisation, again, is a good starting point, along with analyses of relatively simple datasets. I do think Hadley Wickham’s new toolboxes are very useful and powerful. The tricky thing about these toolboxes is that they are very self-contained, so when you want to do something that isn’t readily available in them, you have to fall back on base R.

There is now lots of stuff on the web, more and more R user groups and places you can go and sit with people and complain or get help. How to get into R depends very much on personality - whether you want a very structured introduction or want to just dive in and start trying things.

H: Can you name a few ecology papers that are examples of good usage of statistics?

B: Personally, I am really drawn to papers that combine theory and statistics in a powerful way. Steve Ellner’s papers on ecological time-series analysis (e.g. Ellner et al. 1998; Childs et al. 2003; Hiltunen et al. 2014) are a personal favourite.

H: What are your favourite statistics text books?

B: I have a bunch of books that I like to triangulate between. I think that Crawley’s book does a good job of covering the basics, as do Alan Zuur’s, but I don’t think they take a strong enough principled stand about things like data snooping. For example, they advocate the minimal adequate model. But the good thing about them is that they deal with realistic ecological situations. I very much like McCarthy’s “Bayesian Methods for Ecology” textbook. So these are all from the ecology side. Frank Harrell’s and Gelman and Hill’s regression textbooks are not oriented towards ecology and are more technical than most ecologists are interested in, but the ideas in those books are wonderful - you can skip the equations and still get a lot out of these books. Philosophically, these are the books I most agree with and that I get the most out of.

H: Final question: it is still early in your trip - you have been here only for a couple of days – but, what are your first impressions about the students here?

B: They seem really good. And I think – this is again a statistical answer – when I go abroad, especially to developing countries, I am always blown away by the quality of the students. They want to learn, want to work hard, and really want to know what’s going on. Most importantly, they really want to understand for the sake of understanding it and not just to get their jobs done. There is a little bit of that, but the vast majority of students are well-prepared and really excited and eager to do more. It makes me happy.
